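This article uses the registration of AMS (ActivityManagerService) with servicemanager as an example to explain how a service registers with SM (ServiceManager).

Relevant code paths

ServiceManager.addService()

AMS is registered in the setSystemProcess() function: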

    public void setSystemProcess() {
        try {
            ServiceManager.addService(Context.ACTIVITY_SERVICE, this, true,
                    DUMP_FLAG_PRIORITY_CRITICAL | DUMP_FLAG_PRIORITY_NORMAL | DUMP_FLAG_PROTO);
            ServiceManager.addService(ProcessStats.SERVICE_NAME, mProcessStats);
            ServiceManager.addService("meminfo", new MemBinder(this), false,
                    DUMP_FLAG_PRIORITY_HIGH);
            ServiceManager.addService("gfxinfo", new GraphicsBinder(this));
            ServiceManager.addService("dbinfo", new DbBinder(this));
            if (MONITOR_CPU_USAGE) {
                ServiceManager.addService("cpuinfo", new CpuBinder(this),
                        false, DUMP_FLAG_PRIORITY_CRITICAL);
            }
            ServiceManager.addService("permission", new PermissionController(this));
            ServiceManager.addService("processinfo", new ProcessInfoService(this));
            ServiceManager.addService("cacheinfo", new CacheBinder(this));
            ...

Services are registered with ServiceManager by calling ServiceManager.addService():

    public static void addService(String name, IBinder service, boolean allowIsolated,
            int dumpPriority) {
        try {
            getIServiceManager().addService(name, service, allowIsolated, dumpPriority);
        } catch (RemoteException e) {
            Log.e(TAG, "error in addService", e);
        }
    }

1.1 getIServiceManager() - obtaining the SMP (ServiceManagerProxy)

    private static IServiceManager sServiceManager;
    ...
    private static IServiceManager getIServiceManager() {
        if (sServiceManager != null) {
            return sServiceManager;
        }

        sServiceManager = ServiceManagerNative
                .asInterface(Binder.allowBlocking(BinderInternal.getContextObject()));
        return sServiceManager;
    }

1.1.1 BinderInternal.getContextObject() - obtaining the BinderProxy that wraps sm's BpBinder

    public static final native IBinder getContextObject();

This is a native method. How it gets registered was covered in section 6 of Android_Binder进程间通信机制01; briefly, the registration flow is:

    sequenceDiagram
    app_main ->> app_main:main()
    app_main ->> AndroidRuntime:start()
    AndroidRuntime ->> AndroidRuntime:startReg()
    AndroidRuntime ->> AndroidRuntime:register_jni_procs()
    AndroidRuntime ->> android_util_Binder:register_android_os_Binder()
    android_util_Binder ->> android_util_Binder:int_register_android_os_BinderInternal(env)
    android_util_Binder ->> android_util_Binder:gBinderInternalMethods

app_main.main() -> AndroidRuntime.start() -> AndroidRuntime.startReg() -> AndroidRuntime.register_jni_procs() -> android_util_Binder.register_android_os_Binder() -> android_util_Binder.int_register_android_os_BinderInternal(env) -> android_util_Binder.gBinderInternalMethods:

    static const JNINativeMethod gBinderInternalMethods[] = {
        { "getContextObject", "()Landroid/os/IBinder;", (void*)android_os_BinderInternal_getContextObject },
        { "joinThreadPool", "()V", (void*)android_os_BinderInternal_joinThreadPool },
        ...
    };

So the function actually invoked is android_os_BinderInternal_getContextObject():

    static jobject android_os_BinderInternal_getContextObject(JNIEnv* env, jobject clazz)
    {
        sp<IBinder> b = ProcessState::self()->getContextObject(NULL);
        return javaObjectForIBinder(env, b);
    }

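It does two main things:

- ProcessState::self()->getContextObject(NULL): obtains the BpBinder whose handle is 0. BpBinder is the native-layer binder object; see section 2.2 of Android_Binder进程间通信机制02-ServiceManager_启动和获取.

- javaObjectForIBinder(env, BpBinder): converts the IBinder into a Java object, namely a BinderProxy. The conversion stores a native pointer (as a long) in BinderProxy's mNativeData; that pointer leads to a struct holding the IBinder.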

    jobject javaObjectForIBinder(JNIEnv* env, const sp<IBinder>& val)
    {
        ...
        BinderProxyNativeData* nativeData = new BinderProxyNativeData();
        nativeData->mOrgue = new DeathRecipientList;
        nativeData->mObject = val;

        jobject object = env->CallStaticObjectMethod(gBinderProxyOffsets.mClass,
                gBinderProxyOffsets.mGetInstance, (jlong) nativeData, (jlong) val.get());
        ...
        return object;
    }

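The parameter val is a reference to the strong pointer (sp<IBinder>) that holds the BpBinder, and it is assigned to nativeData->mObject. nativeData is a pointer to a BinderProxyNativeData struct and is later stored in BinderProxy's mNativeData field.

gBinderProxyOffsets is populated in int_register_android_os_BinderProxy():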

    const char* const kBinderProxyPathName = "android/os/BinderProxy";

    static int int_register_android_os_BinderProxy(JNIEnv* env)
    {
        ...
        jclass clazz = FindClassOrDie(env, kBinderProxyPathName);
        gBinderProxyOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
        gBinderProxyOffsets.mGetInstance = GetStaticMethodIDOrDie(env, clazz, "getInstance",
                "(JJ)Landroid/os/BinderProxy;");
        ...
        gBinderProxyOffsets.mNativeData = GetFieldIDOrDie(env, clazz, "mNativeData", "J");
        return RegisterMethodsOrDie(
            env, kBinderProxyPathName,
            gBinderProxyMethods, NELEM(gBinderProxyMethods));
    }

Note that the field ID of BinderProxy's mNativeData is looked up here and stored in gBinderProxyOffsets.mNativeData.

So env->CallStaticObjectMethod() invokes the getInstance() method in BinderProxy.java:

    private static BinderProxy getInstance(long nativeData, long iBinder) {
        BinderProxy result;
        synchronized (sProxyMap) {
            try {
                result = sProxyMap.get(iBinder);
                if (result != null) {
                    return result;
                }
                result = new BinderProxy(nativeData);
            ...
            NoImagePreloadHolder.sRegistry.registerNativeAllocation(result, nativeData);
            sProxyMap.set(iBinder, result);
        }
        return result;
    }

    private BinderProxy(long nativeData) {
        mNativeData = nativeData;
    }
    ...
    private final long mNativeData;
    }

BinderProxy holds a ProxyMap named sProxyMap. The BpBinder passed in from the native layer (its address, as a long) is the key and the BinderProxy is the value stored in sProxyMap.

Then, in new BinderProxy(nativeData), the nativeData passed in from the native layer is stored in the BinderProxy's mNativeData field. nativeData is a pointer to a BinderProxyNativeData struct:

    struct BinderProxyNativeData {
        sp<IBinder> mObject;
        sp<DeathRecipientList> mOrgue;
    };

mObject in this struct is the BpBinder, so whoever holds the BinderProxy can reach the BpBinder.

In the end javaObjectForIBinder() returns a BinderProxy that wraps the BpBinder with handle 0 (reachable through BinderProxy.mNativeData). In other words, the native-layer binder object (BpBinder) is wrapped in a Java-layer binder object (BinderProxy) and returned to the caller.
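The caching behaviour of getInstance() can be sketched in C++ roughly as follows. This is only a toy model under stated assumptions: ToyProxy and ToyProxyCache are hypothetical names, a std::unordered_map stands in for ProxyMap, and plain integers stand in for the native pointers. The real ProxyMap is more involved than this.

```cpp
#include <cstdint>
#include <memory>
#include <unordered_map>

// Hypothetical stand-in for BinderProxy: it only remembers the native data value.
struct ToyProxy {
    explicit ToyProxy(std::int64_t nativeData) : mNativeData(nativeData) {}
    const std::int64_t mNativeData;
};

// Hypothetical stand-in for ProxyMap: keyed by the native binder address.
class ToyProxyCache {
public:
    // Returns the cached proxy for iBinder, creating one on first use.
    std::shared_ptr<ToyProxy> getInstance(std::int64_t nativeData, std::int64_t iBinder) {
        auto it = mMap.find(iBinder);
        if (it != mMap.end()) {
            return it->second;  // same native binder -> same proxy object
        }
        auto proxy = std::make_shared<ToyProxy>(nativeData);
        mMap.emplace(iBinder, proxy);
        return proxy;
    }

private:
    std::unordered_map<std::int64_t, std::shared_ptr<ToyProxy>> mMap;
};
```

Asking twice for the same iBinder key yields the same proxy, which is the property the Java code relies on.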

1.1.2 Binder.allowBlocking()

    public static IBinder allowBlocking(IBinder binder) {
        try {
            if (binder instanceof BinderProxy) {
                ((BinderProxy) binder).mWarnOnBlocking = false;
            } else if (binder != null && binder.getInterfaceDescriptor() != null
                    && binder.queryLocalInterface(binder.getInterfaceDescriptor()) == null) {
                Log.w(TAG, "Unable to allow blocking on interface " + binder);
            }
        } catch (RemoteException ignored) {
        }
        return binder;
    }

The argument is a BinderProxy, so the method simply executes ((BinderProxy) binder).mWarnOnBlocking = false and returns the same BinderProxy.

Binder.allowBlocking(BinderInternal.getContextObject()) therefore returns a BinderProxy that wraps the BpBinder with handle == 0.

1.1.3 ServiceManagerNative.asInterface() - constructing the SMP from the BinderProxy

    public final class ServiceManagerNative {
        private ServiceManagerNative() {}
        ...
        public static IServiceManager asInterface(IBinder obj) {
            if (obj == null) {
                return null;
            }
            return new ServiceManagerProxy(obj);
        }
    }

This returns a ServiceManagerProxy constructed with the BinderProxy as its argument:

    class ServiceManagerProxy implements IServiceManager {
        public ServiceManagerProxy(IBinder remote) {
            mRemote = remote;
            mServiceManager = IServiceManager.Stub.asInterface(remote);
        }
        ...
        private IServiceManager mServiceManager;
    }

The SMP constructor receives the BinderProxy, assigns it to mRemote, and then initializes mServiceManager through IServiceManager.Stub.asInterface().

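IServiceManager is defined in an AIDL file, which is converted into Java and C++ code when the source is built. The generated IServiceManager.java contains a Stub class, and IServiceManager.Stub.asInterface() returns an instance of Stub's inner class Proxy. Proxy implements IServiceManager and acts as the client side of IServiceManager, so the mServiceManager obtained here is effectively this Proxy object, i.e. the client, while the BinderProxy passed in stands for the server side. Calling a method on mServiceManager first goes through the AIDL-generated IServiceManager.java.

So getIServiceManager() obtains a ServiceManagerProxy whose argument is the BinderProxy wrapping the BpBinder with handle == 0.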

1.2 addService()

Now look at getIServiceManager().addService(), which is ServiceManagerProxy.addService():

    class ServiceManagerProxy implements IServiceManager {
        public ServiceManagerProxy(IBinder remote) {
            mRemote = remote;
            mServiceManager = IServiceManager.Stub.asInterface(remote);
        }
        ...
        public void addService(String name, IBinder service, boolean allowIsolated, int dumpPriority)
                throws RemoteException {
            mServiceManager.addService(name, service, allowIsolated, dumpPriority);
        }
        ...
    }

This in turn calls mServiceManager.addService(name, service, allowIsolated, dumpPriority). As analyzed in section [1.1.3](#1.1.3 ServiceManagerNative.asInterface()), mServiceManager is the client side, so the call lands in the Proxy's addService() method in IServiceManager.java, that is, IServiceManager.Stub.Proxy.addService():

    @Override
    public void addService(java.lang.String name, android.os.IBinder service,
            boolean allowIsolated, int dumpPriority) throws android.os.RemoteException {
        android.os.Parcel _data = android.os.Parcel.obtain();
        android.os.Parcel _reply = android.os.Parcel.obtain();
        try {
            _data.writeInterfaceToken(DESCRIPTOR);
            _data.writeString(name);
            _data.writeStrongBinder(service);
            _data.writeBoolean(allowIsolated);
            _data.writeInt(dumpPriority);
            boolean _status = mRemote.transact(Stub.TRANSACTION_addService, _data, _reply, 0);
            _reply.readException();
        } finally {
            _reply.recycle();
            _data.recycle();
        }
    }

_data here is a Parcel. writeString(name) and writeStrongBinder(service) go through JNI to android_os_Parcel_writeString16() and android_os_Parcel_writeStrongBinder() in frameworks/base/core/jni/android_os_Parcel.cpp, and from there to Parcel.writeStrongBinder() -> flattenBinder() -> writeObject(). The net effect is that the values end up in data.mData and data.mObjects.

mRemote here is the BinderProxy, which stands for the server side, so its transact() function is called (note: while the server-side transact is in progress, the client is suspended and waits). Arguments such as name and service are packed into the data parameter:

    public boolean transact(int code, Parcel data, Parcel reply, int flags)
            throws RemoteException {
        ...
        try {
            return transactNative(code, data, reply, flags);
        }
        ...
    }
    ...
    public native boolean transactNative(int code, Parcel data, Parcel reply, int flags)
            throws RemoteException;

The code passed in is the add-service transaction code (Stub.TRANSACTION_addService in the Proxy code above) and flags defaults to 0. The call reaches the native method transactNative; gBinderProxyMethods in android_util_Binder.cpp shows what it maps to:

    static const JNINativeMethod gBinderProxyMethods[] = {
        {"pingBinder", "()Z", (void*)android_os_BinderProxy_pingBinder},
        {"isBinderAlive", "()Z", (void*)android_os_BinderProxy_isBinderAlive},
        {"getInterfaceDescriptor", "()Ljava/lang/String;", (void*)android_os_BinderProxy_getInterfaceDescriptor},
        {"transactNative", "(ILandroid/os/Parcel;Landroid/os/Parcel;I)Z", (void*)android_os_BinderProxy_transact},
        {"linkToDeath", "(Landroid/os/IBinder$DeathRecipient;I)V", (void*)android_os_BinderProxy_linkToDeath},
        {"unlinkToDeath", "(Landroid/os/IBinder$DeathRecipient;I)Z", (void*)android_os_BinderProxy_unlinkToDeath},
        {"getNativeFinalizer", "()J", (void*)android_os_BinderProxy_getNativeFinalizer},
        {"getExtension", "()Landroid/os/IBinder;", (void*)android_os_BinderProxy_getExtension},
    };

The function actually invoked is android_os_BinderProxy_transact():

    static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
            jint code, jobject dataObj, jobject replyObj, jint flags)
    {
        ...
        Parcel* data = parcelForJavaObject(env, dataObj);
        ...
        Parcel* reply = parcelForJavaObject(env, replyObj);
        ...
        IBinder* target = getBPNativeData(env, obj)->mObject.get();
        ...
        status_t err = target->transact(code, *data, reply, flags);
        ...
        if (err == NO_ERROR) {
            return JNI_TRUE;
        } else if (err == UNKNOWN_TRANSACTION) {
            return JNI_FALSE;
        }
        ...
        return JNI_FALSE;
    }

It first retrieves the data that was passed in, and then evaluates IBinder* target = getBPNativeData(env, obj)->mObject.get():

1.2.1 BpBinder->transact()

getBPNativeData(env, obj)->mObject.get()

    BinderProxyNativeData* getBPNativeData(JNIEnv* env, jobject obj) {
        return (BinderProxyNativeData *) env->GetLongField(obj, gBinderProxyOffsets.mNativeData);
    }

Section [1.1.1](# 1.1.1 BinderInternal.getContextObject()) showed that gBinderProxyOffsets.mNativeData holds the field ID of BinderProxy's mNativeData, so getBPNativeData() returns the pointer to the BinderProxyNativeData struct. From javaObjectForIBinder() in section [1.1.1](# 1.1.1 BinderInternal.getContextObject()) we know that the struct's mObject is the BpBinder, so the subsequent target->transact() is a call to BpBinder->transact().

BpBinder->transact()

    status_t BpBinder::transact(
        uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
    {
        ...
            status_t status;
            if (CC_UNLIKELY(isRpcBinder())) {
                status = rpcSession()->transact(rpcAddress(), code, data, reply, flags);
            } else {
                status = IPCThreadState::self()->transact(binderHandle(), code, data, reply, flags);
            }
            return status;
        }
        ...
    }

CC_UNLIKELY tells the compiler that the else branch is the more likely one, which helps avoid a performance penalty on the common path. From here the call continues into IPCThreadState's transact() method.
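As an illustration of the branch hint, here is a minimal sketch. TOY_UNLIKELY and chooseTransport are hypothetical names introduced for this example; the sketch assumes the hint is built on the GCC/Clang builtin __builtin_expect and falls back to the plain expression on other compilers.

```cpp
#include <string>

// Hypothetical macro mirroring the usual branch-hint idiom; GCC and Clang
// provide __builtin_expect, other compilers fall back to the plain expression.
#if defined(__GNUC__) || defined(__clang__)
#define TOY_UNLIKELY(exp) (__builtin_expect(!!(exp), 0))
#else
#define TOY_UNLIKELY(exp) (!!(exp))
#endif

// Chooses a transport the way the excerpt above does: the RPC path is
// marked as the rare case, the kernel binder path as the common one.
inline std::string chooseTransport(bool isRpcBinder) {
    if (TOY_UNLIKELY(isRpcBinder)) {
        return "rpc";
    }
    return "kernel";
}
```

The hint changes only how the compiler lays out the branches; the result of the condition is the same with or without it.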

1.2.2 IPCThreadState::self()->transact()

    status_t IPCThreadState::transact(int32_t handle,
                                      uint32_t code, const Parcel& data,
                                      Parcel* reply, uint32_t flags)
    {
        ...
        flags |= TF_ACCEPT_FDS;
        ...
        err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, nullptr);
        ...
        if ((flags & TF_ONE_WAY) == 0) {
            ...
            if (reply) {
                err = waitForResponse(reply);
            } else {
                Parcel fakeReply;
                err = waitForResponse(&fakeReply);
            }
            ...
            IF_LOG_TRANSACTIONS() {
                TextOutput::Bundle _b(alog);
                alog << "BR_REPLY thr " << (void*)pthread_self() << " / hand "
                    << handle << ": ";
                if (reply) alog << indent << *reply << dedent << endl;
                else alog << "(none requested)" << endl;
            }
        } else {
            err = waitForResponse(nullptr, nullptr);
        }

        return err;
    }

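TF_ACCEPT_FDS means that the reply is allowed to contain file descriptors. This call is synchronous; an asynchronous call would carry TF_ONE_WAY. Two functions matter most from here on:

- writeTransactionData(): packs the data into mOut, ready to be written to the binder driver

- waitForResponse(): actually performs the write to the binder driver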
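The flag handling above can be sketched as below. The TOY_ names and helper functions are hypothetical, and the numeric values are as I recall them from the binder UAPI header, so treat them as assumptions and check them against your kernel headers.

```cpp
#include <cstdint>

// Flag values as recalled from the binder UAPI header; treat them as
// assumptions rather than authoritative constants.
enum ToyTransactionFlags : std::uint32_t {
    TOY_TF_ONE_WAY = 0x01,     // asynchronous call, no reply expected
    TOY_TF_ACCEPT_FDS = 0x10,  // reply may carry file descriptors
};

// Mirrors "flags |= TF_ACCEPT_FDS" in IPCThreadState::transact().
inline std::uint32_t prepareFlags(std::uint32_t flags) {
    return flags | TOY_TF_ACCEPT_FDS;
}

// Mirrors the "(flags & TF_ONE_WAY) == 0" test: true means a synchronous call.
inline bool isSynchronous(std::uint32_t flags) {
    return (flags & TOY_TF_ONE_WAY) == 0;
}
```

With flags == 0, as passed by the generated addService() proxy, the call is synchronous and the caller waits in waitForResponse().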

1.2.2.1 writeTransactionData - packing the data and command into mOut

writeTransactionData()

    status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
        int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
    {
        binder_transaction_data tr;

        tr.target.ptr = 0;
        tr.target.handle = handle;
        tr.code = code;
        tr.flags = binderFlags;
        tr.cookie = 0;
        tr.sender_pid = 0;
        tr.sender_euid = 0;

        const status_t err = data.errorCheck();
        if (err == NO_ERROR) {
            tr.data_size = data.ipcDataSize();
            tr.data.ptr.buffer = data.ipcData();
            tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);
            tr.data.ptr.offsets = data.ipcObjects();
        }
        ...
        mOut.writeInt32(cmd);
        mOut.write(&tr, sizeof(tr));

        return NO_ERROR;
    }

    uintptr_t Parcel::ipcData() const
    {
        return reinterpret_cast<uintptr_t>(mData);
    }

    uintptr_t Parcel::ipcObjects() const
    {
        return reinterpret_cast<uintptr_t>(mObjects);
    }

    status_t Parcel::writeObject(const flat_binder_object& val, bool nullMetaData)
    {
        *reinterpret_cast<flat_binder_object*>(mData+mDataPos) = val;

        if (nullMetaData || val.binder != 0) {
            mObjects[mObjectsSize] = mDataPos;
            acquire_object(ProcessState::self(), val, this, &mOpenAshmemSize);
            mObjectsSize++;
        }
        ...
    }

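Both tr.data.ptr.buffer (which is mData) and tr.data.ptr.offsets (which is mObjects) hold addresses. buffer is the start address of the data area, which stores the transferred data (including binder objects). offsets is the start address of the offsets array, which describes where each IPC object (flat_binder_object) sits inside the data area. Each entry of the array is a binder_size_t (effectively an unsigned 32-bit or 64-bit integer) whose value is the offset of one IPC object relative to mData (think of it as an index into the buffer).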
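The relationship between the data area and the offsets array can be sketched with a toy parcel. ToyObject and ToyParcel are hypothetical names; the sketch ignores the alignment and padding rules of the real Parcel and only shows how recorded offsets let a reader find the objects again.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical 16-byte stand-in for flat_binder_object.
struct ToyObject {
    std::uint64_t type;
    std::uint64_t value;
};

// Hypothetical stand-in for Parcel: a byte buffer plus an offsets array.
struct ToyParcel {
    std::vector<std::uint8_t> data;    // plays the role of mData
    std::vector<std::size_t> offsets;  // plays the role of mObjects

    void writeInt32(std::int32_t v) { append(&v, sizeof(v)); }

    // Records where the object starts, then appends its bytes.
    void writeObject(const ToyObject& obj) {
        offsets.push_back(data.size());
        append(&obj, sizeof(obj));
    }

    // Reads back the index-th object by following its recorded offset.
    ToyObject objectAt(std::size_t index) const {
        ToyObject out{};
        std::memcpy(&out, data.data() + offsets[index], sizeof(out));
        return out;
    }

private:
    void append(const void* src, std::size_t len) {
        const auto* p = static_cast<const std::uint8_t*>(src);
        data.insert(data.end(), p, p + len);
    }
};
```

Plain values and objects share one buffer; only the objects get an entry in the offsets array, which is what allows the driver to locate and translate them.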

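As its name suggests, binder_transaction_data tr is the data to be transferred, although the real payload is what tr.data.ptr.buffer points to. The cmd argument is BC_TRANSACTION. cmd and then tr are written into mOut in that order (so skipping past cmd gives the address of tr). Later, talkWithDriver() assigns mOut.data() (a pointer value) to binder_write_read.write_buffer, which is how the data reaches the driver layer.

BC stands for Binder Command, a command sent to the driver; BR stands for Binder Return, a command returned from the driver.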
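The "command word followed by a struct" layout of mOut can be illustrated with a small sketch. ToyTxn, packCommand, and unpackCommand are hypothetical names, and ToyTxn is a much smaller stand-in for binder_transaction_data.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, much smaller stand-in for binder_transaction_data.
struct ToyTxn {
    std::uint32_t handle;
    std::uint32_t code;
    std::uint64_t bufferAddr;  // address of the payload, not the payload itself
};

// Appends "cmd" followed by the raw bytes of "tr", in the same order as
// mOut.writeInt32(cmd) followed by mOut.write(&tr, sizeof(tr)).
inline void packCommand(std::vector<std::uint8_t>& out, std::uint32_t cmd, const ToyTxn& tr) {
    const auto* c = reinterpret_cast<const std::uint8_t*>(&cmd);
    out.insert(out.end(), c, c + sizeof(cmd));
    const auto* t = reinterpret_cast<const std::uint8_t*>(&tr);
    out.insert(out.end(), t, t + sizeof(tr));
}

// Reads the command word, then skips past it to reach the struct, which is
// what the receiving side does with the same buffer.
inline ToyTxn unpackCommand(const std::vector<std::uint8_t>& in, std::uint32_t* cmd) {
    std::memcpy(cmd, in.data(), sizeof(*cmd));
    ToyTxn tr{};
    std::memcpy(&tr, in.data() + sizeof(*cmd), sizeof(tr));
    return tr;
}
```

The receiver needs no length prefix: the command word tells it which struct follows and therefore how many bytes to consume.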

mIn and mOut are both Parcel members of IPCThreadState, declared in IPCThreadState.h:

    Parcel mIn;
    Parcel mOut;

1.2.2.2 waitForResponse - writing data to the binder driver

    // Declaration: acquireResult defaults to nullptr
    status_t waitForResponse(Parcel *reply, status_t *acquireResult=nullptr);

    status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
    {
        uint32_t cmd;
        int32_t err;

        while (1) {
            if ((err=talkWithDriver()) < NO_ERROR) break;
            err = mIn.errorCheck();
            ...
            cmd = (uint32_t)mIn.readInt32();
            ...
            switch (cmd) {
            ...
            case BR_TRANSACTION_COMPLETE:
                if (!reply && !acquireResult) goto finish;
                break;
            ...
            default:
                err = executeCommand(cmd);
                if (err != NO_ERROR) goto finish;
                break;
            }
        }
        ...
        return err;
    }

waitForResponse() does two main things:

- Write data to the binder driver: waitForResponse() does not write to the driver itself; it delegates to talkWithDriver().

- Handle the commands sent back by the binder driver, such as BR_TRANSACTION_COMPLETE and BR_REPLY.

1.2.2.2.1 talkWithDriver() - writing data to the binder driver and placing the driver's returned data in mIn

    // Declaration in IPCThreadState.h: doReceive defaults to true
    status_t talkWithDriver(bool doReceive=true);

    status_t IPCThreadState::talkWithDriver(bool doReceive)
    {
        ...
        binder_write_read bwr;

        const bool needRead = mIn.dataPosition() >= mIn.dataSize();
        const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

        bwr.write_size = outAvail;
        bwr.write_buffer = (uintptr_t)mOut.data();

        if (doReceive && needRead) {
            bwr.read_size = mIn.dataCapacity();
            bwr.read_buffer = (uintptr_t)mIn.data();
        } else {
            bwr.read_size = 0;
            bwr.read_buffer = 0;
        }
        ...
        if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

        bwr.write_consumed = 0;
        bwr.read_consumed = 0;
        status_t err;
        do {
            ...
            if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
                err = NO_ERROR;
            else
                err = -errno;
            ...
        } while (err == -EINTR);
        ...

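talkWithDriver() is declared in IPCThreadState.h with doReceive defaulting to true, and waitForResponse() calls it without arguments, so doReceive is true here. Nothing has been written to mIn yet, so it still holds its initial values: mIn.dataPosition() returns mDataPos, which is 0, and mIn.dataSize() returns mDataSize, which is also 0. needRead is therefore true, and bwr.read_size is set to mIn.dataCapacity(), which is 256.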
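The needRead/outAvail arithmetic can be checked in isolation with a small sketch. ToyIoPlan and planIo are hypothetical names; the function only reproduces the size computation from the excerpt and performs no I/O.

```cpp
#include <cstddef>

// Hypothetical result type: the two sizes talkWithDriver() fills into bwr.
struct ToyIoPlan {
    std::size_t writeSize;
    std::size_t readSize;
};

// Reproduces the needRead/outAvail arithmetic from the excerpt above.
inline ToyIoPlan planIo(std::size_t inPos, std::size_t inSize, std::size_t inCapacity,
                        std::size_t outSize, bool doReceive) {
    const bool needRead = inPos >= inSize;  // everything in mIn was consumed
    const std::size_t outAvail = (!doReceive || needRead) ? outSize : 0;
    ToyIoPlan plan{outAvail, 0};
    if (doReceive && needRead) {
        plan.readSize = inCapacity;
    }
    return plan;
}
```

With an empty mIn and doReceive == true, both a write and a read are requested; if mIn still holds unread data, neither is, and the call returns without entering the driver.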

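talkWithDriver() does two main jobs:

- Prepare the binder_write_read data and enter the driver through ioctl, which runs binder_ioctl() and binder_ioctl_write_read() in the driver layer. binder_thread_write() writes the data, and binder_thread_read() then writes return commands (BR_NOOP, followed by BR_TRANSACTION_COMPLETE for the calling thread) to user space (see the driver-layer walkthrough in Android_Binder进程间通信机制01 for the details).

- Process the data returned by the driver and place it in mIn for later handling.

binder_ioctl_write_read()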

    static int binder_ioctl_write_read(struct file *filp,
                    unsigned int cmd, unsigned long arg,
                    struct binder_thread *thread)
    {
        int ret = 0;
        struct binder_proc *proc = filp->private_data;
        unsigned int size = _IOC_SIZE(cmd);
        void __user *ubuf = (void __user *)arg;
        struct binder_write_read bwr;
        ...
        if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
            ret = -EFAULT;
            goto out;
        }
        ...
        if (bwr.write_size > 0) {
            ret = binder_thread_write(proc, thread,
                          bwr.write_buffer,
                          bwr.write_size,
                          &bwr.write_consumed);
            trace_binder_write_done(ret);
            ...
        }
        ...
    }

This copy_from_user() is not the "single copy" that binder is known for. It does copy the user-space bwr into the kernel's bwr, but the real payload is referenced by bwr.write_buffer, which is mOut.data(), and mOut.data() returns an address. So only an address is copied here, not the data being transferred.
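The point that only a descriptor is copied can be shown with a plain user-space sketch. ToyBwr and copyDescriptor are hypothetical names, and std::memcpy stands in for copy_from_user(); no kernel API is involved.

```cpp
#include <cstdint>
#include <cstring>

// Hypothetical stand-in for binder_write_read: sizes plus a buffer address.
struct ToyBwr {
    std::uint64_t writeSize;
    std::uintptr_t writeBuffer;  // address of the payload
};

// Plays the role of copy_from_user(&bwr, ubuf, sizeof(bwr)): only the small
// descriptor is duplicated, the payload it points to is not touched.
inline ToyBwr copyDescriptor(const ToyBwr& userBwr) {
    ToyBwr kernelBwr{};
    std::memcpy(&kernelBwr, &userBwr, sizeof(kernelBwr));
    return kernelBwr;
}
```

After the copy, both descriptors refer to the same payload bytes, so a later change to the payload is visible through either one.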

a. binder_thread_write() - locating the target process sm, delivering the data to it, and waking it up

    static int binder_thread_write(struct binder_proc *proc,
                struct binder_thread *thread,
                binder_uintptr_t binder_buffer, size_t size,
                binder_size_t *consumed)
    {
        uint32_t cmd;
        struct binder_context *context = proc->context;
        void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
        void __user *ptr = buffer + *consumed;
        void __user *end = buffer + size;

        while (ptr < end && thread->return_error.cmd == BR_OK) {
            int ret;

            if (get_user(cmd, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
            switch (cmd) {
            ...
            case BC_TRANSACTION:
            case BC_REPLY: {
                struct binder_transaction_data tr;

                if (copy_from_user(&tr, ptr, sizeof(tr)))
                    return -EFAULT;
                ptr += sizeof(tr);
                binder_transaction(proc, thread, &tr,
                           cmd == BC_REPLY, 0);
                break;
            }
            ...
            *consumed = ptr - buffer;

Section [1.2.2](#1.2.2 IPCThreadState::self()->transact()) showed that the cmd passed to writeTransactionData() is BC_TRANSACTION, so execution enters the BC_TRANSACTION case and calls binder_transaction(); its fourth argument, cmd == BC_REPLY, is false.

binder_transaction

    static void binder_transaction(struct binder_proc *proc,
                       struct binder_thread *thread,
                       struct binder_transaction_data *tr, int reply,
                       binder_size_t extra_buffers_size)
    {
        int ret;
        struct binder_transaction *t;
        struct binder_work *w;
        struct binder_work *tcomplete;
        ...
        struct binder_proc *target_proc = NULL;
        struct binder_thread *target_thread = NULL;
        struct binder_node *target_node = NULL;
        ...
        struct binder_context *context = proc->context;
        ...
        if (reply) {
            ...
        } else {
            if (tr->target.handle) {
                ...
            } else {
                ...
                target_node = context->binder_context_mgr_node;
                if (target_node)
                    target_node = binder_get_node_refs_for_txn(
                            target_node, &target_proc,
                            &return_error);
                ...
            }
            ...
            w = list_first_entry_or_null(&thread->todo,
                             struct binder_work, entry);
            ...
            if (!(tr->flags & TF_ONE_WAY) && thread->transaction_stack) {
                ...
            }
            binder_inner_proc_unlock(proc);
        }
        ...
        t = kzalloc(sizeof(*t), GFP_KERNEL);
        ...
        tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);
        ...
        if (!reply && !(tr->flags & TF_ONE_WAY))
            t->from = thread;
        ...
        t->sender_euid = task_euid(proc->tsk);
        t->to_proc = target_proc;
        t->to_thread = target_thread;
        t->code = tr->code;
        t->flags = tr->flags;
        ...
        t->buffer = binder_alloc_new_buf(&target_proc->alloc, tr->data_size,
            tr->offsets_size, extra_buffers_size,
            !reply && (t->flags & TF_ONE_WAY), current->tgid);
        ...
        t->buffer->debug_id = t->debug_id;
        t->buffer->transaction = t;
        t->buffer->target_node = target_node;
        t->buffer->clear_on_free = !!(t->flags & TF_CLEAR_BUF);
        trace_binder_transaction_alloc_buf(t->buffer);

        if (binder_alloc_copy_user_to_buffer(
                    &target_proc->alloc,
                    t->buffer, 0,
                    (const void __user *)
                        (uintptr_t)tr->data.ptr.buffer,
                    tr->data_size)) {
            ...
        }
        if (binder_alloc_copy_user_to_buffer(
                    &target_proc->alloc,
                    t->buffer,
                    ALIGN(tr->data_size, sizeof(void *)),
                    (const void __user *)
                        (uintptr_t)tr->data.ptr.offsets,
                    tr->offsets_size)) {
            ...
        }
        ...
        for (buffer_offset = off_start_offset; buffer_offset < off_end_offset;
             buffer_offset += sizeof(binder_size_t)) {
            ...
        }
        if (t->buffer->oneway_spam_suspect)
            tcomplete->type = BINDER_WORK_TRANSACTION_ONEWAY_SPAM_SUSPECT;
        else
            tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;
        t->work.type = BINDER_WORK_TRANSACTION;

        if (reply) {
            ...
        } else if (!(t->flags & TF_ONE_WAY)) {
            ...
            binder_enqueue_deferred_thread_work_ilocked(thread, tcomplete);
            t->need_reply = 1;
            t->from_parent = thread->transaction_stack;
            thread->transaction_stack = t;
            binder_inner_proc_unlock(proc);
            return_error = binder_proc_transaction(t,
                    target_proc, target_thread);
            if (return_error) {
                binder_inner_proc_lock(proc);
                binder_pop_transaction_ilocked(thread, t);
                binder_inner_proc_unlock(proc);
                goto err_dead_proc_or_thread;
            }
        }
        ...
    }

binder_proc_transaction() is called to deliver BINDER_WORK_TRANSACTION to sm and wake it up:

    static int binder_proc_transaction(struct binder_transaction *t,
                        struct binder_proc *proc,
                        struct binder_thread *thread)
    {
        struct binder_node *node = t->buffer->target_node;
        struct binder_priority node_prio;
        bool oneway = !!(t->flags & TF_ONE_WAY);
        bool pending_async = false;
        bool skip = false;
        ...
        if (oneway) {
            ...
        }
        ...
        if (!thread && !pending_async && !skip)
            thread = binder_select_thread_ilocked(proc);
        ...
        if (thread) {
            ...
            binder_enqueue_thread_work_ilocked(thread, &t->work);
        } else if (!pending_async) {
            binder_enqueue_work_ilocked(&t->work, &proc->todo);
        ...
        if (!pending_async)
            binder_wakeup_thread_ilocked(proc, thread, !oneway);
        ...
        return 0;
    }

    static void
    binder_enqueue_thread_work_ilocked(struct binder_thread *thread,
                       struct binder_work *work)
    {
        WARN_ON(!list_empty(&thread->waiting_thread_node));
        binder_enqueue_work_ilocked(work, &thread->todo);
        thread->process_todo = true;
    }

    static void binder_wakeup_thread_ilocked(struct binder_proc *proc,
                         struct binder_thread *thread,
                         bool sync)
    {
        assert_spin_locked(&proc->inner_lock);

        if (thread) {
            trace_android_vh_binder_wakeup_ilocked(thread->task, sync, proc);
            if (sync)
                wake_up_interruptible_sync(&thread->wait);
            else
                wake_up_interruptible(&thread->wait);
            return;
        }
        ...
    }

Note the chain binder_proc_transaction() -> binder_enqueue_thread_work_ilocked(): the latter sets process_todo = true, so that the thread does not go to sleep when it later enters binder_thread_read(). For a synchronous call, wake_up_interruptible_sync() wakes sm; for an asynchronous call, wake_up_interruptible() is used instead.

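The main work of binder_transaction() is:

- Obtain target_node and target_proc.

- Copy the data into the physical memory that is mapped by both the kernel and the target process.

- Translate the binder object from BINDER_TYPE_BINDER into BINDER_TYPE_HANDLE. (Whether an object is BINDER_TYPE_BINDER or BINDER_TYPE_HANDLE is decided earlier, when writeStrongBinder() serializes the data before transact. What we pass to addService is the AMS server-side object, so binder.localBinder() is not null, a BBinder is passed, and the type is BINDER_TYPE_BINDER.)

- Save thread->transaction_stack so that sm can find the client later.

- Set t->work.type = BINDER_WORK_TRANSACTION and send it to sm so that sm starts working.

- Set tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE and send it to the client.

- Wake sm with wake_up_interruptible_sync().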
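The two enqueue operations at the end of binder_transaction() can be modelled with a small sketch. All names here are hypothetical. The sketch also assumes, from my reading of the driver, that the deferred enqueue used for tcomplete does not set process_todo, while the regular thread enqueue does; verify this against your kernel version.

```cpp
#include <deque>

enum class ToyWork { Transaction, TransactionComplete };

// Hypothetical stand-in for binder_thread: a todo list plus the process_todo flag.
struct ToyThread {
    std::deque<ToyWork> todo;
    bool processTodo = false;
};

// Like binder_enqueue_thread_work_ilocked(): queue the work and flag it.
inline void enqueueThreadWork(ToyThread& t, ToyWork w) {
    t.todo.push_back(w);
    t.processTodo = true;
}

// Like the deferred variant used for tcomplete (assumption): queue the work
// without setting the flag, so the thread may still go to sleep.
inline void enqueueDeferredWork(ToyThread& t, ToyWork w) {
    t.todo.push_back(w);
}

// Models the two enqueues done for a synchronous call.
inline void sendSyncTransaction(ToyThread& caller, ToyThread& target) {
    enqueueDeferredWork(caller, ToyWork::TransactionComplete);
    enqueueThreadWork(target, ToyWork::Transaction);
}
```

In this model the target is flagged to process its queue immediately, while the caller has work queued but is not flagged, which matches the caller sleeping until the reply arrives.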

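Why is memory requested through binder_alloc_new_buf() before copying? Because binder_mmap() maps 1M-8K of virtual memory but allocates only one physical page (4K); the rest is allocated on demand. In other words, physical memory is allocated through binder_alloc_new_buf() only when binder_ioctl() actually transfers data.

At this point the data to be transferred has been copied to the target process, which can read it directly, and the target sm process has been woken up (~~before this, sm was blocked in binder_thread_read()~~). Three things remain to be done:

The first two steps have no ordering constraint between them and proceed in parallel. Let's look at the client's calling thread first.

Once binder_transaction() has finished, binder_thread_write() is done as well, and execution returns to binder_ioctl_write_read():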

    static int binder_ioctl_write_read(struct file *filp,
                    unsigned int cmd, unsigned long arg,
                    struct binder_thread *thread)
    {
        ...
        if (bwr.read_size > 0) {
            ret = binder_thread_read(proc, thread, bwr.read_buffer,
                         bwr.read_size,
                         &bwr.read_consumed,
                         filp->f_flags & O_NONBLOCK);
            ...
            if (!binder_worklist_empty_ilocked(&proc->todo))
                binder_wakeup_proc_ilocked(proc);
            ...
        }
        ...

Because bwr.read_size > 0, binder_thread_read() is executed next.

b. binder_thread_read() - the client process is suspended

    static int binder_thread_read(struct binder_proc *proc,
                      struct binder_thread *thread,
                      binder_uintptr_t binder_buffer, size_t size,
                      binder_size_t *consumed, int non_block)
    {
        void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
        void __user *ptr = buffer + *consumed;
        void __user *end = buffer + size;

        int ret = 0;
        int wait_for_proc_work;

        if (*consumed == 0) {
            if (put_user(BR_NOOP, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
        }
        ...
        wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);
        ...
        if (wait_for_proc_work) {
            ...
        }

        if (non_block) {
            if (!binder_has_work(thread, wait_for_proc_work))
                ret = -EAGAIN;
        } else {
            ret = binder_wait_for_work(thread, wait_for_proc_work);
        }

        thread->looper &= ~BINDER_LOOPER_STATE_WAITING;
        ...
    }

    static bool binder_available_for_proc_work_ilocked(struct binder_thread *thread)
    {
        return !thread->transaction_stack &&
            binder_worklist_empty_ilocked(&thread->todo) &&
            (thread->looper & (BINDER_LOOPER_STATE_ENTERED |
                       BINDER_LOOPER_STATE_REGISTERED));
    }

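consumed corresponds to bwr.read_consumed, which is 0 at this point, so BR_NOOP is written to the user-space address ptr.

binder_transaction() recorded the transaction that the server must handle on the calling thread via thread->transaction_stack = t, so thread->transaction_stack != NULL. It also added tcomplete to the calling thread's pending work queue &thread->todo, so that queue is not empty either. wait_for_proc_work is therefore false, and non_block is false too, so execution enters binder_wait_for_work():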
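The condition evaluated by binder_available_for_proc_work_ilocked() can be reproduced as a small predicate. ToyThreadState and the TOY_ constants are hypothetical; the looper bit values are as I recall them from the driver source and should be treated as assumptions.

```cpp
#include <cstdint>

// Looper state bits; the numeric values are recalled from the driver source
// and should be treated as assumptions.
constexpr std::uint32_t TOY_LOOPER_REGISTERED = 0x01;
constexpr std::uint32_t TOY_LOOPER_ENTERED = 0x02;

// Hypothetical stand-in for the fields of binder_thread that the check reads.
struct ToyThreadState {
    bool hasTransactionStack;
    bool todoEmpty;
    std::uint32_t looper;
};

// Same condition as binder_available_for_proc_work_ilocked() in the excerpt.
inline bool availableForProcWork(const ToyThreadState& t) {
    return !t.hasTransactionStack && t.todoEmpty &&
           (t.looper & (TOY_LOOPER_ENTERED | TOY_LOOPER_REGISTERED)) != 0;
}
```

A caller in the middle of a synchronous transaction has a transaction stack and a non-empty todo list, so the predicate is false and the thread waits only for work addressed to itself.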

    static int binder_wait_for_work(struct binder_thread *thread,
                    bool do_proc_work)
    {
        DEFINE_WAIT(wait);
        ...
        for (;;) {
            prepare_to_wait(&thread->wait, &wait, TASK_INTERRUPTIBLE);
            if (binder_has_work_ilocked(thread, do_proc_work))
                break;
            if (do_proc_work)
                list_add(&thread->waiting_thread_node,
                     &proc->waiting_threads);
            ...
            schedule();
            ...
        }
        finish_wait(&thread->wait, &wait);
        ...
    }

In the end the thread blocks inside binder_wait_for_work() and the client process is suspended. Next, let's see what sm does.

c. Processing the data on the server side

c.1 sm calls handleEvent() to read the message

sm uses epoll to monitor binder_fd; when binder_fd becomes readable, handleEvent() is called to handle it.

    class BinderCallback : public LooperCallback {
    public:
        static sp<BinderCallback> setupTo(const sp<Looper>& looper) {
            ...
            int ret = looper->addFd(binder_fd, Looper::POLL_CALLBACK, Looper::EVENT_INPUT, cb,
                                    nullptr);
            return cb;
        }

        int handleEvent(int, int, void*) override {
            IPCThreadState::self()->handlePolledCommands();
            return 1;
        }
    };

This calls handlePolledCommands():

    status_t IPCThreadState::handlePolledCommands()
    {
        status_t result;

        do {
            result = getAndExecuteCommand();
        } while (mIn.dataPosition() < mIn.dataSize());

        processPendingDerefs();
        flushCommands();
        return result;
    }

handlePolledCommands() tells sm that the binder driver has data to read, and calls getAndExecuteCommand():

    status_t IPCThreadState::getAndExecuteCommand()
    {
        status_t result;
        int32_t cmd;

        result = talkWithDriver();
        ...
    }

Here is the familiar talkWithDriver() again:

    status_t IPCThreadState::talkWithDriver(bool doReceive)
    {
        ...
        const bool needRead = mIn.dataPosition() >= mIn.dataSize();
        const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

        bwr.write_size = outAvail;
        ...
        if (doReceive && needRead) {
            bwr.read_size = mIn.dataCapacity();
            bwr.read_buffer = (uintptr_t)mIn.data();
        } else {
        ...

When sm starts, mIn.dataSize() = 0 and mOut.dataSize() = 0, so needRead is true, bwr.read_size = 256 (the default capacity), and bwr.write_size = 0. sm enters the driver to perform a read and goes to sleep in binder_wait_for_work() inside binder_thread_read().

At this point mIn and mOut again hold no data, so needRead is true, bwr.read_size = 256, and bwr.write_size = 0, and execution enters binder_thread_read().

c.2 Handling BINDER_WORK_TRANSACTION and passing BR_TRANSACTION to user space

Recall that during binder_transaction() above, the target thread's thread->process_todo was set to true, so sm does not sleep in binder_wait_for_work() this time.

    static int binder_thread_read(
        ...
        } else {
            ret = binder_wait_for_work(thread, wait_for_proc_work);
        }

        thread->looper &= ~BINDER_LOOPER_STATE_WAITING;

        while (1) {
            uint32_t cmd;
            struct binder_transaction_data_secctx tr;
            struct binder_transaction_data *trd = &tr.transaction_data;
            struct binder_work *w = NULL;
            struct list_head *list = NULL;
            struct binder_transaction *t = NULL;
            struct binder_thread *t_from;
            size_t trsize = sizeof(*trd);
            ...
            if (!binder_worklist_empty_ilocked(&thread->todo))
                list = &thread->todo;
            else if (!binder_worklist_empty_ilocked(&proc->todo) &&
                   wait_for_proc_work)
                list = &proc->todo;
            ...
            w = binder_dequeue_work_head_ilocked(list);
            ...
            switch (w->type) {
            case BINDER_WORK_TRANSACTION: {
                binder_inner_proc_unlock(proc);
                t = container_of(w, struct binder_transaction, work);
            } break;
            ...
            if (t->buffer->target_node) {
                struct binder_node *target_node = t->buffer->target_node;
                struct binder_priority node_prio;

                trd->target.ptr = target_node->ptr;
                trd->cookie = target_node->cookie;
                node_prio.sched_policy = target_node->sched_policy;
                node_prio.prio = target_node->min_priority;
                binder_transaction_priority(current, t, node_prio,
                                target_node->inherit_rt);
                cmd = BR_TRANSACTION;
            } else {
                trd->target.ptr = 0;
                trd->cookie = 0;
                cmd = BR_REPLY;
            }
            trd->code = t->code;
            trd->flags = t->flags;
            trd->sender_euid = from_kuid(current_user_ns(), t->sender_euid);

            t_from = binder_get_txn_from(t);
            if (t_from) {
                struct task_struct *sender = t_from->proc->tsk;

                trd->sender_pid =
                    task_tgid_nr_ns(sender,
                            task_active_pid_ns(current));
                trace_android_vh_sync_txn_recvd(thread->task, t_from->task);
            } else {
                trd->sender_pid = 0;
            }
            ...
            trd->data_size = t->buffer->data_size;
            trd->offsets_size = t->buffer->offsets_size;
            trd->data.ptr.buffer = (uintptr_t)t->buffer->user_data;
            trd->data.ptr.offsets = trd->data.ptr.buffer +
                        ALIGN(t->buffer->data_size,
                            sizeof(void *));

            tr.secctx = t->security_ctx;
            if (t->security_ctx) {
                cmd = BR_TRANSACTION_SEC_CTX;
                trsize = sizeof(tr);
            }
            if (put_user(cmd, (uint32_t __user *)ptr)) {
                if (t_from)
                    binder_thread_dec_tmpref(t_from);

                binder_cleanup_transaction(t, "put_user failed",
                               BR_FAILED_REPLY);

                return -EFAULT;
            }
            ptr += sizeof(uint32_t);
            if (copy_to_user(ptr, &tr, trsize)) {
                if (t_from)
                    binder_thread_dec_tmpref(t_from);

                binder_cleanup_transaction(t, "copy_to_user failed",
                               BR_FAILED_REPLY);

                return -EFAULT;
            }
            ptr += trsize;
            ...

The main steps are:

Get sm's thread->todo queue;

Take the binder_work object w from the thread->todo queue;

Based on w->type (BINDER_WORK_TRANSACTION), obtain from w the binder_transaction object t that carries the transfer data;

Record the command BR_TRANSACTION;

Copy the data in t into the binder_transaction_data trd; trd points to the transaction_data member of the binder_transaction_data_secctx tr;

Assign the Binder entity's address with trd->target.ptr = target_node->ptr;. Here target_node is sm's binder_node, and target_node->ptr is the address of the binder entity in its host process. When sm registered itself as the context manager, binder_init_node_ilocked() set ptr through binder_uintptr_t ptr = fp ? fp->binder : 0, which yields 0, so target_node->ptr is actually 0 here and cookie is 0 as well, giving tr.target.ptr = 0. Note that binder_transaction() never assigned tr.target.ptr to target_node->ptr;

Pass BR_TRANSACTION and tr to user space at the address ptr;

When binder_thread_read() finishes, execution returns to user space in the sm process.
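The command selection and the "command word followed by payload" layout described in the steps above can be modelled in user space. The following is only a sketch under stated assumptions: `ReturnCmd`, `TxnData`, `TxnDataSecCtx`, `pickCommand` and `appendToReadBuffer` are hypothetical names, the enum values are placeholders rather than the real ioctl-encoded BR_* constants, and the structs keep only a few of the real fields.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical stand-ins for the BR_* return codes; the real values are
// ioctl-encoded constants from the binder UAPI header.
enum ReturnCmd : uint32_t { kBrTransaction = 1, kBrReply = 2, kBrTransactionSecCtx = 3 };

// Reduced model of binder_transaction_data (only a few fields).
struct TxnData {
    uint64_t target_ptr;
    uint64_t cookie;
    uint32_t code;
};

// Reduced model of binder_transaction_data_secctx.
struct TxnDataSecCtx {
    TxnData transaction_data;
    uint64_t secctx;
};

// Same order of checks as the excerpt: the target node decides between
// BR_TRANSACTION and BR_REPLY, then a security context overrides the command.
ReturnCmd pickCommand(bool has_target_node, bool has_security_ctx) {
    ReturnCmd cmd = has_target_node ? kBrTransaction : kBrReply;
    if (has_security_ctx) cmd = kBrTransactionSecCtx;
    return cmd;
}

// Appends the 32-bit command and then the payload, like put_user() followed
// by copy_to_user(). Returns the number of bytes appended.
std::size_t appendToReadBuffer(std::vector<uint8_t>& buf, ReturnCmd cmd,
                               const TxnDataSecCtx& tr) {
    // Only the SEC_CTX variant copies the larger struct (trsize in the kernel).
    const std::size_t trsize =
        (cmd == kBrTransactionSecCtx) ? sizeof(TxnDataSecCtx) : sizeof(TxnData);
    assert(trsize <= sizeof(tr));
    const std::size_t start = buf.size();
    const uint32_t raw = cmd;
    buf.resize(start + sizeof(raw) + trsize);
    std::memcpy(buf.data() + start, &raw, sizeof(raw));
    std::memcpy(buf.data() + start + sizeof(raw), &tr, trsize);
    return sizeof(raw) + trsize;
}
```

For the addService request delivered to sm, the model corresponds to `pickCommand(true, false)`: a target node exists and, in this walkthrough, no security context is attached.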

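c.3 Handling BR_TRANSACTION

Execution first returns to talkWithDriver(), which does nothing else of note afterwards, and then to getAndExecuteCommand(), which fetches the BR_TRANSACTION command sent by the driver: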

```cpp
status_t IPCThreadState::getAndExecuteCommand()
    ...
    result = talkWithDriver();
    if (result >= NO_ERROR) {
        size_t IN = mIn.dataAvail();
        if (IN < sizeof(int32_t)) return result;
        cmd = mIn.readInt32();
        ...
        result = executeCommand(cmd);
        ...
    return result;
}
```

The BR_TRANSACTION command that the driver passed to user space in binder_thread_read() is read out, and executeCommand(cmd) is called:

```cpp
status_t IPCThreadState::executeCommand(int32_t cmd)
    BBinder* obj;
    RefBase::weakref_type* refs;
    status_t result = NO_ERROR;

    switch ((uint32_t)cmd) {
    ...
    case BR_TRANSACTION:
        {
            ...
            if (cmd == (int) BR_TRANSACTION_SEC_CTX) {
                result = mIn.read(&tr_secctx, sizeof(tr_secctx));
            } else {
                result = mIn.read(&tr, sizeof(tr));
                tr_secctx.secctx = 0;
            }
            ...
            Parcel reply;
            ...
            if (tr.target.ptr) {
                if (reinterpret_cast<RefBase::weakref_type*>(
                        tr.target.ptr)->attemptIncStrong(this)) {
                    error = reinterpret_cast<BBinder*>(tr.cookie)->transact(tr.code, buffer,
                            &reply, tr.flags);
                    reinterpret_cast<BBinder*>(tr.cookie)->decStrong(this);
                } else {
                    error = UNKNOWN_TRANSACTION;
                }
            } else {
                error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);
            }
```

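This tr.target.ptr is the address of the binder entity (binder_node) in its host process, and the driver fills it in when it writes the data back (in binder_thread_read()). The current process, however, is sm. When sm registered as the context manager, binder_init_node_ilocked() set ptr to 0 through binder_uintptr_t ptr = fp ? fp->binder : 0 (**for details see binder.c -> binder_ioctl_set_ctx_mgr()**), so the value the driver writes back for sm in binder_thread_read() is 0, whereas other IBinder objects get a non-zero value. Execution therefore takes the else branch;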
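The branch on tr.target.ptr can be illustrated with a small stand-alone model. This is a sketch, not the libbinder code: `Receiver`, `NamedReceiver` and `dispatch` are hypothetical names, and the reference counting (attemptIncStrong()/decStrong()) from the real code is left out.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <utility>

// Hypothetical minimal receiver interface standing in for BBinder.
struct Receiver {
    virtual ~Receiver() = default;
    virtual std::string transact(uint32_t code) = 0;
};

// A receiver that reports its own name and the code it was asked to handle.
struct NamedReceiver : Receiver {
    explicit NamedReceiver(std::string n) : name(std::move(n)) {}
    std::string transact(uint32_t code) override {
        return name + ":" + std::to_string(code);
    }
    std::string name;
};

// A non-zero target means the driver filled in the address of a local binder
// object, and the cookie carries the object pointer. A zero target means the
// request is addressed to the context manager object.
std::string dispatch(uintptr_t target_ptr, uintptr_t cookie,
                     Receiver& context_object, uint32_t code) {
    if (target_ptr != 0) {
        assert(cookie != 0);
        return reinterpret_cast<Receiver*>(cookie)->transact(code);
    }
    return context_object.transact(code);
}
```

With `target_ptr == 0` the call always lands on `context_object`, which mirrors why the request to sm reaches the_context_object.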

c.4 The server handles the IPC data - transact -> onTransact -> TRANSACTION_addService

the_context_object is a BBinder object. When sm starts (main.cpp), the sm object is what gets passed in. sm inherits from BnServiceManager, BnServiceManager inherits from BnInterface, and BnInterface in turn inherits from BBinder and IServiceManager;

```cpp
class ServiceManager :
class BnServiceManager :
class BnInterface :
```

So the_context_object->transact() ultimately executes BBinder::transact():

```cpp
status_t BBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    data.setDataPosition(0);
    ...
    status_t err = NO_ERROR;
    switch (code) {
        ...
        default:
            err = onTransact(code, data, reply, flags);
            break;
    }
    ...
}
```

Execution enters the default branch. For a Java-layer Binder object, the onTransact() called here is the one in JavaBBinder:

```cpp
status_t onTransact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags = 0) override
{
    ...
    jboolean res = env->CallBooleanMethod(mObject, gBinderOffsets.mExecTransact,
        code, reinterpret_cast<jlong>(&data), reinterpret_cast<jlong>(reply), flags);

    if (env->ExceptionCheck()) {
        ScopedLocalRef<jthrowable> excep(env, env->ExceptionOccurred());
        binder_report_exception(env, excep.get(),
                                "*** Uncaught remote exception! "
                                "(Exceptions are not yet supported across processes.)");
        res = JNI_FALSE;
    }
```

CallBooleanMethod() then calls the execTransact() method in Binder.java:

```java
private boolean execTransact(int code, long dataObj, long replyObj,
        int flags) {
    final int callingUid = Binder.getCallingUid();
    final long origWorkSource = ThreadLocalWorkSource.setUid(callingUid);
    try {
        return execTransactInternal(code, dataObj, replyObj, flags, callingUid);
    } finally {
        ThreadLocalWorkSource.restore(origWorkSource);
    }
}
```

Next comes execTransactInternal():

```java
private boolean execTransactInternal(int code, long dataObj, long replyObj, int flags,
        int callingUid) {
    ...
    res = onTransact(code, data, reply, flags);
```

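The onTransact() that finally handles the request is BnServiceManager::onTransact(). BnServiceManager inherits from BBinder and overrides BBinder's virtual onTransact() function in the AIDL-generated IServiceManager.cpp file. (I still have some doubts about this part of the call flow. The article on how Java-layer Binder objects are initialized explains why JavaBBinder.onTransact() gets called.) Since servicemanager is a native process, BBinder::transact() can also reach this override directly through virtual dispatch, without passing through the Java layer.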

```cpp
::android::status_t BnServiceManager::onTransact(uint32_t _aidl_code, const ::android::Parcel& _aidl_data, ::android::Parcel* _aidl_reply, uint32_t _aidl_flags)
  ::android::status_t _aidl_ret_status = ::android::OK;
  switch (_aidl_code) {
  ...
  case BnServiceManager::TRANSACTION_addService:
  {
    ...
    ::android::binder::Status _aidl_status(addService(in_name, in_service, in_allowIsolated, in_dumpPriority));
    _aidl_ret_status = _aidl_status.writeToParcel(_aidl_reply);
    if (((_aidl_ret_status) != (::android::OK))) {
      break;
    }
    if (!_aidl_status.isOk()) {
      break;
    }
  }
  break;
```

addService() returns a Status object, which is written into the Parcel object _aidl_reply. As mentioned above, ServiceManager indirectly inherits from IServiceManager and implements the virtual addService() function:

```cpp
ServiceMap mNameToService;

Status ServiceManager::addService(const std::string& name, const sp<IBinder>& binder, bool allowIsolated, int32_t dumpPriority) {
    auto ctx = mAccess->getCallingContext();
    ...
    mNameToService[name] = Service {
        .binder = binder,
        .allowIsolated = allowIsolated,
        .dumpPriority = dumpPriority,
        .debugPid = ctx.debugPid,
    };
    ...
    return Status::ok();
}
```

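sm stores services and their associated information in the mNameToService map: the service name is the key and the value is a Service struct. Status::ok() returns a default-constructed Status().

At this point sm has stored the service together with its binder.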
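The name-to-record bookkeeping can be sketched as a tiny registry. This is an illustration under assumptions, not servicemanager's implementation: `Service` here holds a plain integer `binder_handle` instead of an sp<IBinder>, `Registry` and `find` are hypothetical names, and the empty-name check merely stands in for the validation and permission checks that the excerpt elides.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical simplified record; the real struct stores an sp<IBinder>.
struct Service {
    int binder_handle;
    bool allow_isolated;
    int32_t dump_priority;
};

class Registry {
public:
    // Stores (or overwrites) the record for `name`, like the assignment
    // mNameToService[name] = Service{...} in the excerpt.
    bool addService(const std::string& name, const Service& service) {
        if (name.empty()) return false;  // placeholder for the elided checks
        services_[name] = service;
        assert(services_.count(name) == 1);
        return true;
    }

    // Returns the stored record, or nullptr if the name is unknown.
    const Service* find(const std::string& name) const {
        auto it = services_.find(name);
        return it == services_.end() ? nullptr : &it->second;
    }

    std::size_t size() const { return services_.size(); }

private:
    std::map<std::string, Service> services_;
};
```

Registering the same name twice replaces the earlier record, which is the behaviour of assigning through `operator[]` on a map.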

c.5 sendReply() - the server writes BC_REPLY to the driver

```cpp
status_t IPCThreadState::executeCommand(int32_t cmd)
    ...
            } else {
                error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);
            }

            if ((tr.flags & TF_ONE_WAY) == 0) {
                ...
                constexpr uint32_t kForwardReplyFlags = TF_CLEAR_BUF;
                sendReply(reply, (tr.flags & kForwardReplyFlags));
            } else {
```

tr.flags is still 0 here, so execution enters the if branch and calls sendReply() to send reply back to the requesting client:

```cpp
status_t IPCThreadState::sendReply(const Parcel& reply, uint32_t flags)
{
    status_t err;
    status_t statusBuffer;
    err = writeTransactionData(BC_REPLY, flags, -1, 0, reply, &statusBuffer);
    if (err < NO_ERROR) return err;

    return waitForResponse(nullptr, nullptr);
}
```

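For writeTransactionData(), see section [1.2.2.1](# 1.2.2.1 writeTransactionData - 打包数据和命令到 mOut); here it packs BC_REPLY. talkWithDriver() then talks to the driver. Because mOut holds data and mIn holds none at this point, both write_size and read_size are greater than 0 in talkWithDriver(), and ioctl() both writes data to and reads data from the driver: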

```c
static int binder_thread_write(struct binder_proc *proc,
            struct binder_thread *thread,
            binder_uintptr_t binder_buffer, size_t size,
            binder_size_t *consumed)
{
    ...
        case BC_TRANSACTION:
        case BC_REPLY: {
            struct binder_transaction_data tr;

            if (copy_from_user(&tr, ptr, sizeof(tr)))
                return -EFAULT;
            ptr += sizeof(tr);
            binder_transaction(proc, thread, &tr,
                       cmd == BC_REPLY, 0);
            break;
        }
```

cmd is BC_REPLY here, so the fourth argument of binder_transaction() is true.

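c.6 The server handles BC_REPLY and wakes the client

BINDER_WORK_TRANSACTION_COMPLETE is added to the server's own todo queue and BINDER_WORK_TRANSACTION to the client's todo queue, and then the client is woken up.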

```c
static void binder_transaction(struct binder_proc *proc,
                   struct binder_thread *thread,
                   struct binder_transaction_data *tr, int reply,
                   binder_size_t extra_buffers_size)
{
    int ret;
    struct binder_transaction *t;
    struct binder_work *w;
    struct binder_work *tcomplete;
    binder_size_t buffer_offset = 0;
    binder_size_t off_start_offset, off_end_offset;
    binder_size_t off_min;
    binder_size_t sg_buf_offset, sg_buf_end_offset;
    struct binder_proc *target_proc = NULL;
    struct binder_thread *target_thread = NULL;
    struct binder_node *target_node = NULL;
    struct binder_transaction *in_reply_to = NULL;
    struct binder_transaction_log_entry *e;
    uint32_t return_error = 0;
    uint32_t return_error_param = 0;
    uint32_t return_error_line = 0;
    binder_size_t last_fixup_obj_off = 0;
    binder_size_t last_fixup_min_off = 0;
    struct binder_context *context = ...

    if (reply) {
        binder_inner_proc_lock(proc);
        in_reply_to = thread->transaction_stack;
        ...
        thread->transaction_stack = in_reply_to->to_parent;
        binder_inner_proc_unlock(proc);
        target_thread = binder_get_txn_from_and_acq_inner(in_reply_to);
        ...
        target_proc = target_thread->proc;
        target_proc->tmp_ref++;
        binder_inner_proc_unlock(target_thread->proc);
        ...
    } else
    ...
    ...
    t = kzalloc(sizeof(*t), GFP_KERNEL);
    ...
    tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);
    ...
    t->buffer = binder_alloc_new_buf(&target_proc->alloc, tr->data_size,
    ...
    t->buffer->target_node = target_node;
    ...
    if (binder_alloc_copy_user_to_buffer(
    ...
    }
    if (binder_alloc_copy_user_to_buffer(
    ...
    }
    ...
    if (t->buffer->oneway_spam_suspect)
        tcomplete->type = BINDER_WORK_TRANSACTION_ONEWAY_SPAM_SUSPECT;
    else
        tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;
    t->work.type = BINDER_WORK_TRANSACTION;

    if (reply) {
        binder_enqueue_thread_work(thread, tcomplete);
        binder_inner_proc_lock(target_proc);
        ...
        binder_enqueue_thread_work_ilocked(target_thread, &t->work);
        ...
        wake_up_interruptible_sync(&target_thread->wait);
    } else if (!(t->flags & TF_ONE_WAY)) {
        ...
    }
```

This is the same binder_transaction() analysed earlier, only a different branch is taken. Note that binder_enqueue_thread_work() here sets thread->process_todo = true on the calling thread;

```c
static int binder_thread_read(struct binder_proc *proc,
                  struct binder_thread *thread,
                  binder_uintptr_t binder_buffer, size_t size,
                  binder_size_t *consumed, int non_block)
{
    ...
    wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);
    ...
    if (wait_for_proc_work) {
        ...
    }
    if (non_block) {
        ...
    } else {
        ret = binder_wait_for_work(thread, wait_for_proc_work);
    }
```

thread->todo is not empty, so wait_for_proc_work is false and execution enters binder_wait_for_work():

```c
static int binder_wait_for_work(struct binder_thread *thread,
                bool do_proc_work)
    DEFINE_WAIT(wait);
    struct binder_proc *proc = thread->proc;
    int ret = 0;

    freezer_do_not_count();
    binder_inner_proc_lock(proc);
    for (;;) {
        prepare_to_wait(&thread->wait, &wait, TASK_INTERRUPTIBLE);
        if (binder_has_work_ilocked(thread, do_proc_work))
            break;
        ...
        schedule();
        ...
    return ret;
}
```

Whether the thread sleeps depends on whether binder_has_work_ilocked() returns true. If it does, the loop exits immediately and the thread does not sleep. Here is binder_has_work_ilocked():

```c
static bool binder_has_work_ilocked(struct binder_thread *thread,
                    bool do_proc_work)
    ...
    return thread->process_todo ||
        thread->looper_need_return ||
        (do_proc_work &&
         !binder_worklist_empty_ilocked(&thread->proc->todo));
}
```

Because binder_transaction() set thread->process_todo = true; on the calling thread, binder_has_work_ilocked() returns true, so binder_wait_for_work() does not sleep this time.

c.7 The server handles the BR_TRANSACTION_COMPLETE command

```c
static int binder_thread_read(...)
{
    ...
        w = binder_dequeue_work_head_ilocked(list);
        if (binder_worklist_empty_ilocked(&thread->todo))
            thread->process_todo = false;

        case BINDER_WORK_TRANSACTION_COMPLETE:
            ...
            else
                cmd = BR_TRANSACTION_COMPLETE;
            ...
            if (put_user(cmd, (uint32_t __user *)ptr))
}
```

Note that once a binder_work object is taken from the todo queue, it is removed from the queue. The todo queue held only this one binder_work object, so binder_worklist_empty_ilocked() returns true and thread->process_todo is set to false.
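The interplay between enqueueing work, the process_todo flag and the sleep decision can be condensed into a few lines. This is a user-space sketch with hypothetical names (`ThreadModel`, `enqueueThreadWork`, `dequeueWork`, `hasWork`); locking, wait queues and the per-process todo list are deliberately left out, so `hasWork` corresponds to binder_has_work_ilocked() with do_proc_work == false.

```cpp
#include <cassert>
#include <deque>

enum class WorkType { Transaction, TransactionComplete };

// Reduced model of the per-thread state that matters for the sleep decision.
struct ThreadModel {
    std::deque<WorkType> todo;
    bool process_todo = false;
    bool looper_need_return = false;
};

// Queue the work item and mark the thread as having work to process,
// as the enqueue helpers for thread work do.
void enqueueThreadWork(ThreadModel& t, WorkType w) {
    t.todo.push_back(w);
    t.process_todo = true;
}

// Take the head item; removing the last item clears process_todo,
// like the dequeue step shown for binder_thread_read().
WorkType dequeueWork(ThreadModel& t) {
    assert(!t.todo.empty());
    WorkType w = t.todo.front();
    t.todo.pop_front();
    if (t.todo.empty()) t.process_todo = false;
    return w;
}

// The thread would go to sleep only when this returns false.
bool hasWork(const ThreadModel& t) {
    return t.process_todo || t.looper_need_return;
}
```

In this model, one `enqueueThreadWork` followed by one `dequeueWork` leaves `hasWork` false again, which is the state in which the next wait actually blocks.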

```cpp
status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
    ...
    while (1) {
        if ((err=talkWithDriver()) < NO_ERROR) break;
        switch (cmd) {
        case BR_TRANSACTION_COMPLETE:
            if (!reply && !acquireResult) goto finish;
            break;
```

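Handling the BR_TRANSACTION_COMPLETE command involves no significant work either. Since sendReply() passed nullptr for both reply and acquireResult, the check !reply && !acquireResult succeeds and waitForResponse() leaves the loop through goto finish. The next time sm enters talkWithDriver(), mIn.size = 256 and mOut.size = 0, so it goes into the driver and executes binder_thread_read().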
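How talkWithDriver() arrives at its write and read sizes is not shown in this section, so the following is only a sketch of the decision as I understand it from AOSP, written with hypothetical names (`Sizes`, `computeSizes`, `needsIoctl`) and plain integers instead of Parcel objects. Treat the exact conditions as an assumption to be checked against the IPCThreadState source.

```cpp
#include <cassert>
#include <cstddef>

struct Sizes {
    std::size_t write_size;
    std::size_t read_size;
};

// in_pos/in_size describe how much of the input buffer has been consumed,
// in_capacity is the input buffer capacity, out_size the pending output bytes.
Sizes computeSizes(bool do_receive, std::size_t in_pos, std::size_t in_size,
                   std::size_t in_capacity, std::size_t out_size) {
    assert(in_pos <= in_size);
    // A new read is only requested once all earlier input has been consumed.
    const bool need_read = in_pos >= in_size;
    Sizes s;
    s.write_size = (!do_receive || need_read) ? out_size : 0;
    s.read_size = (do_receive && need_read) ? in_capacity : 0;
    return s;
}

// With nothing to write and nothing to read there is no reason to call ioctl().
bool needsIoctl(const Sizes& s) {
    return s.write_size != 0 || s.read_size != 0;
}
```

Under these assumptions, an empty output buffer and a 256-byte input capacity give `write_size == 0` and `read_size == 256`, which matches a call that only runs binder_thread_read() in the driver.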

c.8 The server suspends

```c
static int binder_thread_read(struct binder_proc *proc,
                  struct binder_thread *thread,
                  binder_uintptr_t binder_buffer, size_t size,
                  binder_size_t *consumed, int non_block)
{
    ...
    wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);
    ...
    if (wait_for_proc_work) {
        ...
    }
    if (non_block) {
        ...
    } else {
        ret = binder_wait_for_work(thread, wait_for_proc_work);
    }

static int binder_wait_for_work(struct binder_thread *thread,
                bool do_proc_work)
{
    ...
    for (;;) {
        prepare_to_wait(&thread->wait, &wait, TASK_INTERRUPTIBLE);
        if (binder_has_work_ilocked(thread, do_proc_work))
            break;
        ...
        schedule();
        ...
    }
    ...
}
```

wait_for_proc_work = false, so execution enters binder_wait_for_work(). Recall from [section c.7](# c.7 服务端处理 BR_TRANSACTION_COMPLETE 命令) that thread->process_todo = false, so sm suspends here.

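To recap, on the reply path binder_transaction() did three things:

Added tcomplete to the todo queue of the calling thread (sm);

Added t to the todo queue of the target (the client that is talking to sm);

Called wake_up_interruptible_sync() to wake the client process.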

Now back to the client process. From the earlier analysis, the client also suspended in binder_wait_for_work(); once woken up, it continues from there.

d. The client resumes - writing BR_TRANSACTION_COMPLETE/BR_REPLY to user space

```c
static int binder_thread_read(struct binder_proc *proc,...)
        ...
        ret = binder_wait_for_work(thread, wait_for_proc_work);
    }

    while (1) {
        ...
        w = binder_dequeue_work_head_ilocked(list);

        switch (w->type) {
        case BINDER_WORK_TRANSACTION: {
            binder_inner_proc_unlock(proc);
            t = container_of(w, struct binder_transaction, work);
        } break;
        ...
        case BINDER_WORK_TRANSACTION_COMPLETE:
        case BINDER_WORK_TRANSACTION_ONEWAY_SPAM_SUSPECT: {
            if (proc->oneway_spam_detection_enabled &&
                   w->type == BINDER_WORK_TRANSACTION_ONEWAY_SPAM_SUSPECT)
                cmd = BR_ONEWAY_SPAM_SUSPECT;
            else
                cmd = BR_TRANSACTION_COMPLETE;
            ...
            kfree(w);
            binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);
            if (put_user(cmd, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
            ...
        } break;
        ...
        }

        if (!t)
            continue;

        if (t->buffer->target_node) {
            ...
        } else {
            trd->target.ptr = 0;
            trd->cookie = 0;
            cmd = BR_REPLY;
        }
        ...
        if (put_user(cmd, (uint32_t __user *)ptr)) {
            if (t_from)
                binder_thread_dec_tmpref(t_from);

            binder_cleanup_transaction(t, "put_user failed",
                                       BR_FAILED_REPLY);
            return -EFAULT;
        }
        ptr += sizeof(uint32_t);
        if (copy_to_user(ptr, &tr, trsize)) {
            if (t_from)
                binder_thread_dec_tmpref(t_from);

            binder_cleanup_transaction(t, "copy_to_user failed",
                                       BR_FAILED_REPLY);
            return -EFAULT;
        }
```

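When the client called binder_transaction(), a BINDER_WORK_TRANSACTION_COMPLETE item was added to the client's todo queue.

When sm finished processing the data and sent BC_REPLY to the driver, it also called binder_transaction(), which added a BINDER_WORK_TRANSACTION item to the todo queue of the target process (the process that had been talking to sm, that is, the client). The client's todo list therefore now holds two binder_work items, BINDER_WORK_TRANSACTION_COMPLETE and BINDER_WORK_TRANSACTION. While BINDER_WORK_TRANSACTION_COMPLETE is being handled, t is still NULL, so after the switch statement the code simply continues with the next loop iteration and the next binder_work item, which is BINDER_WORK_TRANSACTION;

sm did not assign target_node when it executed binder_transaction(), so this time t->buffer->target_node is NULL and the else branch is taken, setting cmd to BR_REPLY. BR_REPLY is then written to ptr (the read_buffer) in user space, and the binder_transaction_data_secctx is copied to ptr (the read_buffer) in user space. In summary, three things happen: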

BR_TRANSACTION_COMPLETE is written to read_buffer

BR_REPLY is written to read_buffer

The binder_transaction_data_secctx is written to read_buffer
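The way the loop drains the client's two work items can be simulated with a queue. This is a sketch with hypothetical names (`Work`, `WorkItem`, `drainTodo`); the read buffer is modelled as a list of labels rather than raw bytes, and the loop stops after delivering one transaction, which is how I understand the real loop to behave.

```cpp
#include <cassert>
#include <deque>
#include <string>
#include <vector>

enum class Work { Transaction, TransactionComplete };

struct WorkItem {
    Work type;
    bool has_target_node;  // only meaningful for Work::Transaction
};

// Drains the todo queue and returns what would be written to the read buffer.
std::vector<std::string> drainTodo(std::deque<WorkItem>& todo) {
    std::vector<std::string> read_buffer;
    while (!todo.empty()) {
        const WorkItem w = todo.front();
        todo.pop_front();
        if (w.type == Work::TransactionComplete) {
            read_buffer.push_back("BR_TRANSACTION_COMPLETE");
            continue;  // no transaction attached (t is NULL), take the next item
        }
        // A missing target node marks the transaction as a reply.
        read_buffer.push_back(w.has_target_node ? "BR_TRANSACTION" : "BR_REPLY");
        read_buffer.push_back("binder_transaction_data");
        break;  // one transaction is delivered per read
    }
    return read_buffer;
}
```

Feeding it a completion item followed by a reply transaction yields the three entries listed above, in that order.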

That completes the client's binder_ioctl_write_read(). Execution returns to talkWithDriver(), which does nothing else useful, and then to waitForResponse().

1.2.2.2.2 Handling the commands sent by the binder driver - BR_TRANSACTION_COMPLETE/BR_REPLY

```cpp
status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
    uint32_t cmd;
    int32_t err;

    while (1) {
        if ((err=talkWithDriver()) < NO_ERROR) break;
        ...
        cmd = (uint32_t)mIn.readInt32();
        ...
        switch (cmd) {
        ...
        case BR_TRANSACTION_COMPLETE:
            if (!reply && !acquireResult) goto finish;
            break;
        ...
        case BR_REPLY:
            {
                binder_transaction_data tr;
                err = mIn.read(&tr, sizeof(tr));
                ...
                if (reply) {
                    if ((tr.flags & TF_STATUS_CODE) == 0) {
                        reply->ipcSetDataReference(
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t),
                            freeBuffer);
                    }
                    ...
```

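In non-ONEWAY mode the BR_TRANSACTION_COMPLETE branch does nothing. The BR_REPLY branch calls Parcel.ipcSetDataReference(), whose main job is to re-initialise the Parcel's data and objects from the argument values, so that the client can later read the data through the functions Parcel provides.

This completes the registration of AMS with SM.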
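The difference between a caller that waits for a reply and one that does not can be shown with a reduced command loop. This is a sketch with hypothetical names (`Cmd`, `Outcome`, `waitForResponseModel`); `wants_reply` stands for "reply or acquireResult is non-null", and every other command handled by the real function is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class Cmd { TransactionComplete, Reply };

struct Outcome {
    bool got_reply;        // true if a reply was received and wanted
    std::size_t consumed;  // number of commands read before finishing
};

Outcome waitForResponseModel(const std::vector<Cmd>& incoming, bool wants_reply) {
    Outcome out{false, 0};
    for (Cmd cmd : incoming) {
        ++out.consumed;
        if (cmd == Cmd::TransactionComplete) {
            if (!wants_reply) break;  // nothing more to wait for (goto finish)
            continue;                 // keep waiting for the reply
        }
        // Cmd::Reply ends the wait in every case.
        out.got_reply = wants_reply;
        break;
    }
    assert(out.consumed <= incoming.size());
    return out;
}
```

The client's addService call corresponds to `wants_reply == true` and finishes on the reply, while sendReply(), which calls waitForResponse(nullptr, nullptr), corresponds to `wants_reply == false` and finishes on the completion command.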

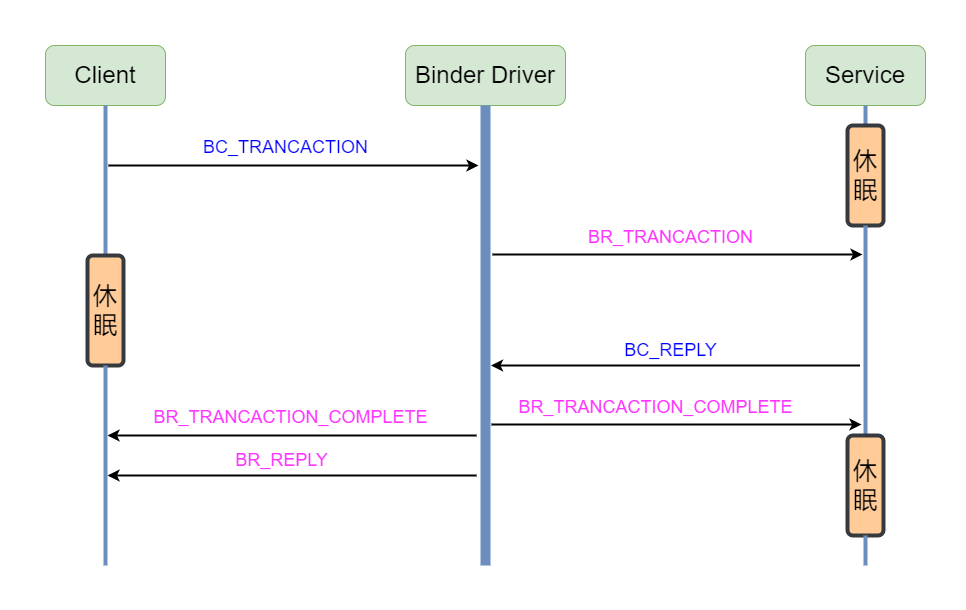

2. IPC command flowchart

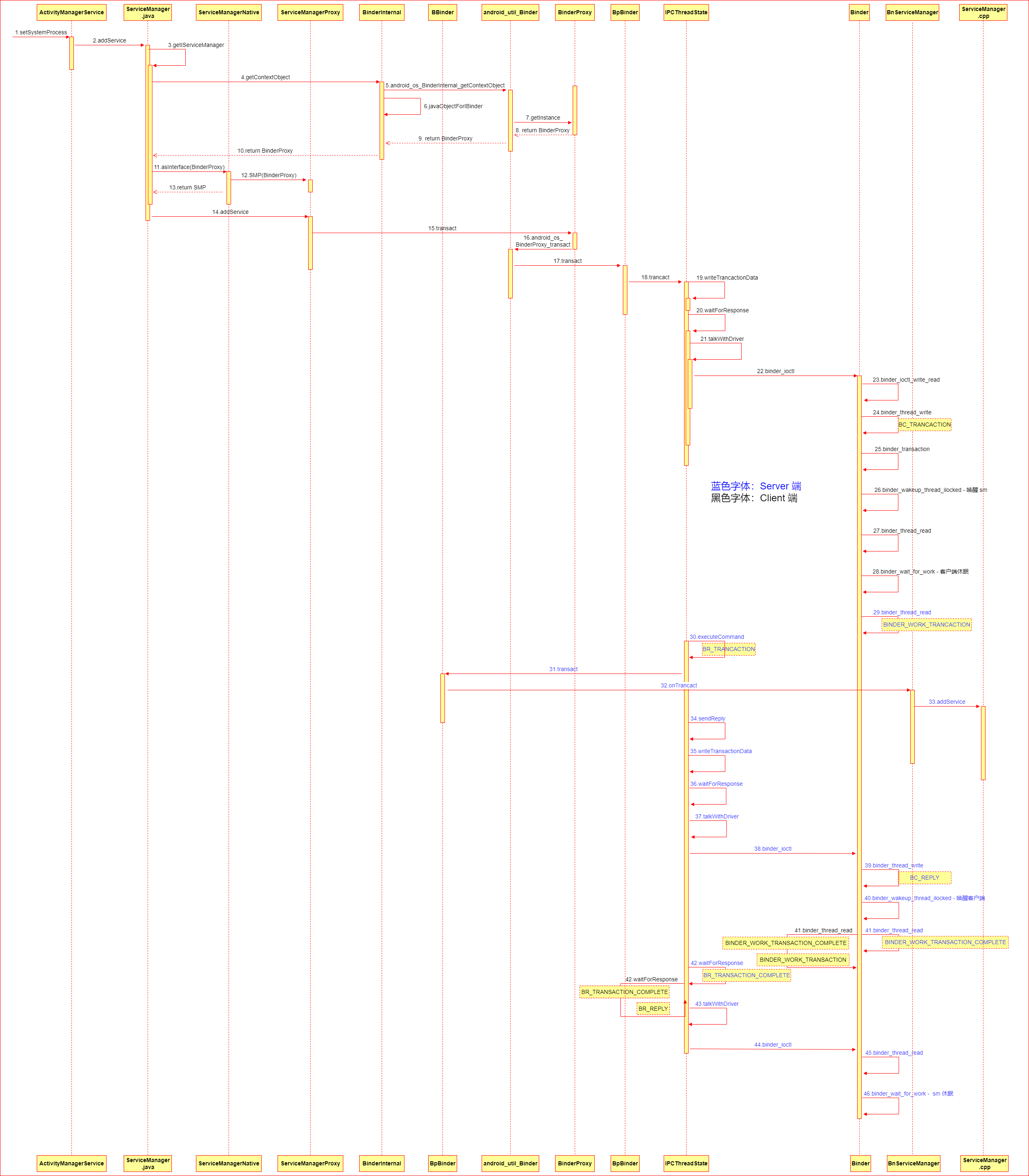

3. AMS registration sequence diagram

Binder_AMS registration sequence diagram

4. Summary

Main work of getIServiceManager():

BinderInternal.getContextObject(): returns a BinderProxy object that wraps BpBinder(handle == 0). The BinderProxy holds a long-typed address, mNativeData, which corresponds to a pointer to a BinderProxyNativeData struct in the native layer. The first member of this struct is mObject, which points to BpBinder(handle == 0);

ServiceManagerNative.asInterface(): obtains the ServiceManagerProxy object; its argument is the BinderProxy.

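Main work of addService():

writeTransactionData: packs the data and the command into mOut;

waitForResponse: writes the data and BC_TRANSACTION;

talkWithDriver(): decides from mIn.size and mOut.size whether to call ioctl;

Client binder_thread_write(): handles the BC_TRANSACTION command;

Client binder_transaction(): finds the target process sm and passes it the BINDER_WORK_TRANSACTION work item and the data, passes BINDER_WORK_TRANSACTION_COMPLETE to the calling thread (the client), and wakes sm;

a. Client binder_thread_read(): the client process suspends;

b. Server binder_thread_read(): the server handles BINDER_WORK_TRANSACTION, and the driver passes BR_TRANSACTION to the server's user space;

Server handleEvent(): fetches and handles the BR_TRANSACTION command;

Server transact()/onTransact(): handles TRANSACTION_addService and returns reply;

Server sendReply(): sends BC_REPLY to the driver;

Server binder_thread_write(): handles the BC_REPLY command;

Server binder_transaction(): finds the client process and passes it the BINDER_WORK_TRANSACTION work item and the data, passes BINDER_WORK_TRANSACTION_COMPLETE to the calling thread (sm), and wakes the client process;

Server binder_thread_read(): handles BINDER_WORK_TRANSACTION_COMPLETE, and the driver sends BR_TRANSACTION_COMPLETE to sm's user space;

Server waitForResponse(): handles the BR_TRANSACTION_COMPLETE command;

c. Server binder_thread_read(): the server suspends;

d. Client binder_thread_read(): the client handles BINDER_WORK_TRANSACTION_COMPLETE and BINDER_WORK_TRANSACTION, and the driver passes BR_TRANSACTION_COMPLETE and BR_REPLY to the client's user space;

Client waitForResponse(): handles the BR_TRANSACTION_COMPLETE and BR_REPLY commands;

a and b run concurrently, as do c and d, with no fixed order between them.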